In a past gig, I was tasked with evaluating container schedulers. The usual suspects, Kubernetes and Nomad, were the contenders. One huge caveat with the whole exercise was that the containers would be scheduled within AWS. You have EKS and ECS, but you can’t move either to on-premises infrastructure if, for some inexplicable reason, the need comes up. Looking closely at these schedulers, they are designed for bare-metal fleets. If you have servers across multiple data centers, like a CDN, then Kubernetes is the best out there. These schedulers introduce necessary complexity for what they can do. Inter-cloud benefits are dubious at best. I imagine AWS scaling plus whatever the AI, sorry, scheduler decides to do can be an ordeal as you watch them fight it out.

I went with what I thought would introduce the least friction. Podman on the EC2 distro of choice was what I ended up with. Nomad was a close call; it had experimental Podman support. Docker is more popular, but there are footguns littered across its vestiges. Maybe it’s a good choice for a developer not familiar with the Linux plumbing underneath. Podman is cleaner and smaller. The resulting system is non-standard, but that doesn’t matter much for systems of such size. Without proper knowledge retention and transfer within an organization, any system is futile, whatever it does. No need for a team holding certifications in Kubernetes. Any DevOps person worth his or her carbon footprint can figure it out and manage or even improve it.

My weird solution

From my experience facilitating that marriage of Podman and systemd, I think a more elaborate daemon for deploying containers is in order. Think of a Docker-like daemon that calls out to Podman and systemd for the hard work. It’s called iyclo.

You could just use Docker. It has more features and is a more mature product. I’m doing this because it’s my weird solution. Like most of my projects, nobody else will use it. I might get to know more about containers, exercise my development muscles, or just have fun along the way. Anybody already doing a variant of the podman+systemd pattern might find some utility in this project.

Initial ideas

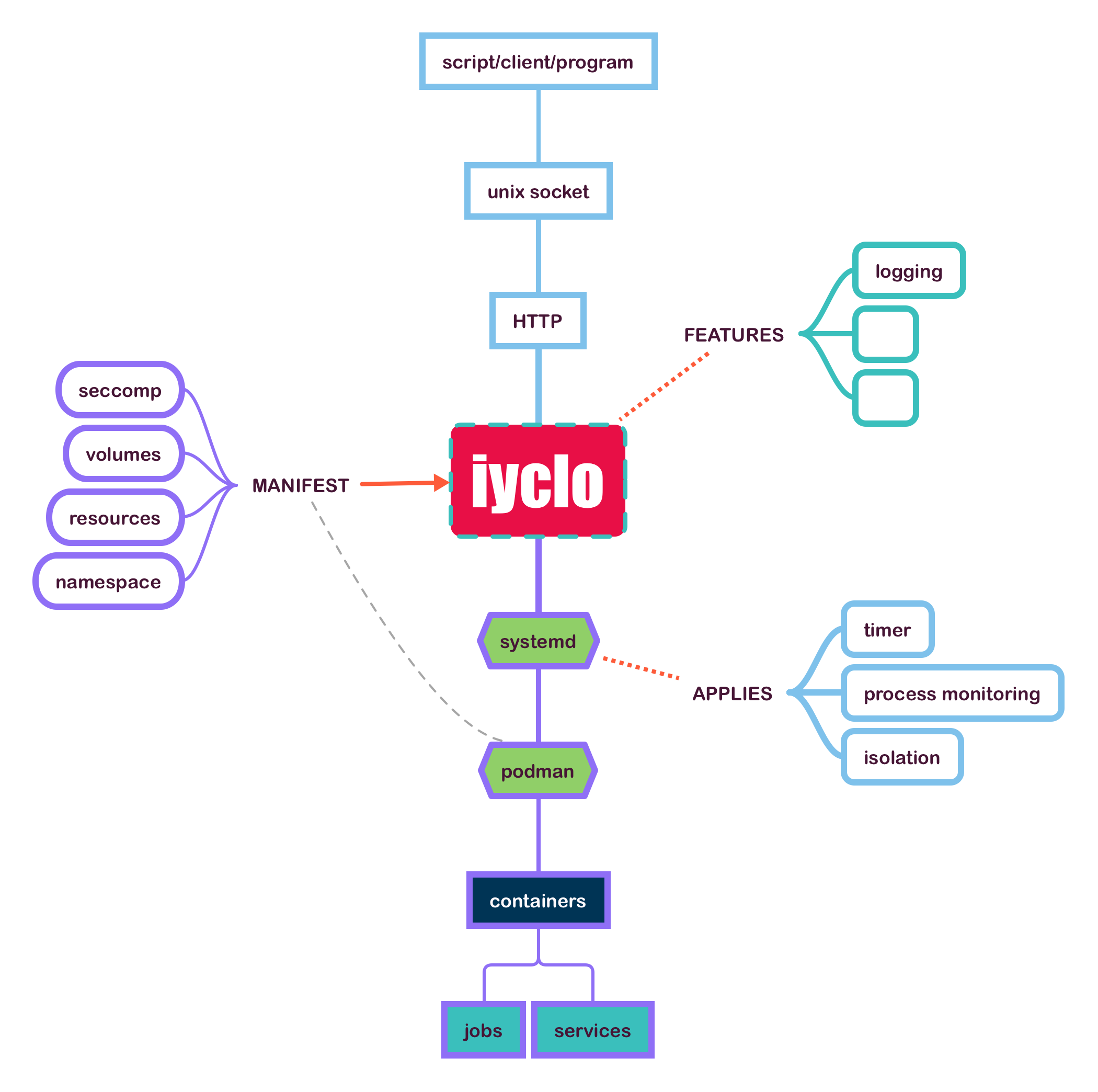

The figure above gives a rough idea of what I’m aiming at. We have a client program, or even a Terraform module, that connects to the API via a daemon socket. The manifest is a JSON payload that instructs iyclo how to provision the containers with podman and systemd. Podman handles container attributes such as sandboxing and resources. Seccomp filters and capability dropping count as sandboxing. Resources can be CPU or memory. Launching and monitoring the podman executable is done by systemd. It can also do some pre-execution sandboxing or isolation. Jobs are scheduled through systemd timers. Instead of services, I think pods is a better name. I’m going to change it in later diagrams.

Current status

The project page is at github/iyclo. For the curious, iyclo is an anagram of oilyc, or oily containers. Sounds ridiculous alright. Anyway, I wanted a unique name for it.

The entrypoint of the program is the command line. I have chosen the clir Go package to handle the command line options. It’s very light and does what I need from it. I’m using it for passing flags like the log file, storage path, and socket path. The REST API will be handled by Labstack echo and LadyLua. Any storage requirement is handled by the bitcask package. The bulk of the code will be written in Lua. I don’t see a problem with writing a CRUD application or a small REST API in Lua. Everything can be converted to Go if an unforeseen circumstance arises. The STDOUT and STDERR of the program are taken over by echo output. The actual logging will be handled through zerolog, which logs to a file.

This is the first part of a series chronicling iyclo. I’m figuring out the REST API at the moment. The next entry will likely include details about the API.